16x Eval Use Cases

See how 16x Eval can help you evaluate and compare AI models for different tasks

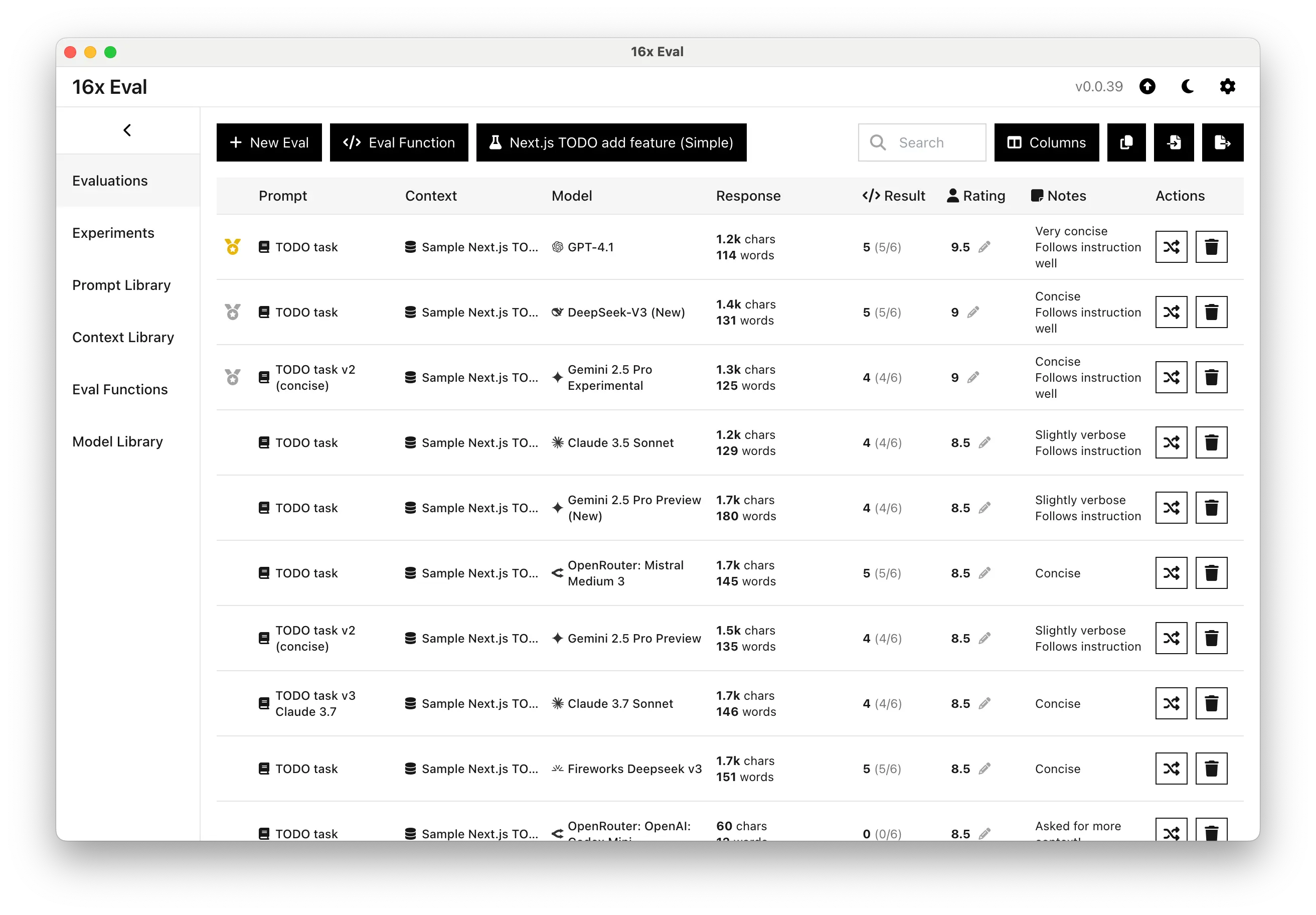

Coding Task Evaluation

Coding experiment with prompt to add a feature to a TODO app

Evaluate and compare AI models for coding tasks. Perfect for developers who want to assess the quality and accuracy of AI-generated code.

- Compare multiple models

- Test different prompts

- Custom evaluation functions

- Response rating system

- Add notes to each response

- Various token statistics
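To make "custom evaluation functions" more concrete, here is a minimal Python sketch of the kind of check one might write for a coding response. This is not 16x Eval's API. The function name `evaluate_response`, the required-snippet list, and the pass/fail result shape are all hypothetical assumptions for illustration.

```python
import re

# Build the triple-backtick marker programmatically so it is easy to embed in examples.
FENCE = "`" * 3
# Matches fenced code blocks with an optional language tag, capturing the body.
CODE_BLOCK = re.compile(FENCE + r"[\w+-]*\n(.*?)" + FENCE, re.DOTALL)

def evaluate_response(response: str, required_snippets: list[str]) -> dict:
    """Hypothetical check: does the model's code mention every required snippet?"""
    blocks = CODE_BLOCK.findall(response)
    # Fall back to the whole response if the model did not use fenced code blocks.
    code = "\n".join(blocks) if blocks else response
    missing = [s for s in required_snippets if s not in code]
    return {"passed": not missing, "missing": missing}

# Usage: a response that adds addTodo but not deleteTodo fails the check.
resp = f"Here is the change:\n{FENCE}ts\nfunction addTodo(title: string): void;\n{FENCE}"
print(evaluate_response(resp, ["addTodo", "deleteTodo"]))
```

A substring check like this is deliberately simple; a real evaluation might instead run tests against the generated code.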

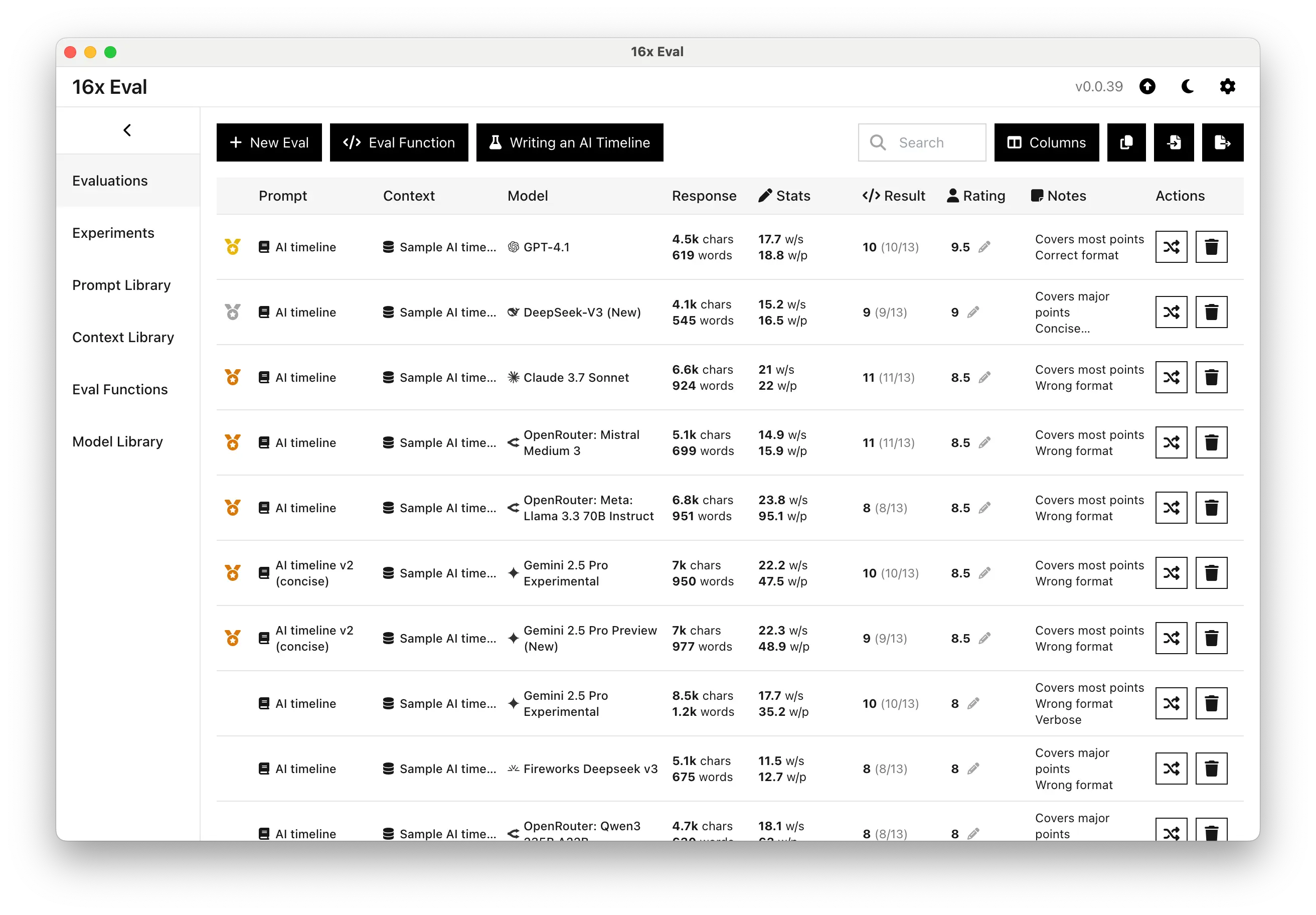

Writing Task Evaluation


Writing experiment with prompt to write an AI timeline

Compare AI models for writing tasks. Ideal for content creators, writers, and AI builders who use AI-assisted writing workflows.

- Compare multiple models

- Test different prompts

- Highlight target text and penalty text

- Writing statistics (words per sentence and per paragraph)

- Custom evaluation functions

- Add notes to each response
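As a rough illustration of the writing statistics and the target/penalty text features listed above, the Python sketch below computes average words per sentence and per paragraph, and counts occurrences of chosen phrases. The function names and the splitting rules (paragraphs separated by blank lines; sentences ending at `.`, `!`, or `?`) are assumptions, not 16x Eval's implementation.

```python
import re

def writing_stats(text: str) -> dict:
    """Hypothetical writing statistics for a model response."""
    body = text.strip()
    # Assumption: paragraphs are separated by one or more blank lines.
    paragraphs = [p for p in re.split(r"\n\s*\n", body) if p.strip()]
    # Assumption: a sentence ends at ., !, or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", body) if s.strip()]
    words = body.split()
    return {
        "words": len(words),
        "words_per_sentence": len(words) / len(sentences) if sentences else 0.0,
        "words_per_paragraph": len(words) / len(paragraphs) if paragraphs else 0.0,
    }

def count_phrases(text: str, phrases: list[str]) -> dict:
    """Case-insensitive count of each phrase, e.g. target or penalty text."""
    lower = text.lower()
    return {p: lower.count(p.lower()) for p in phrases}

# Usage
sample = "AI is moving fast. New models ship monthly.\n\nBenchmarks lag behind. That is a problem!"
print(writing_stats(sample))
print(count_phrases(sample, ["models", "delve"]))
```

Simple punctuation-based splitting miscounts abbreviations such as "e.g.", so treat these numbers as approximate.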

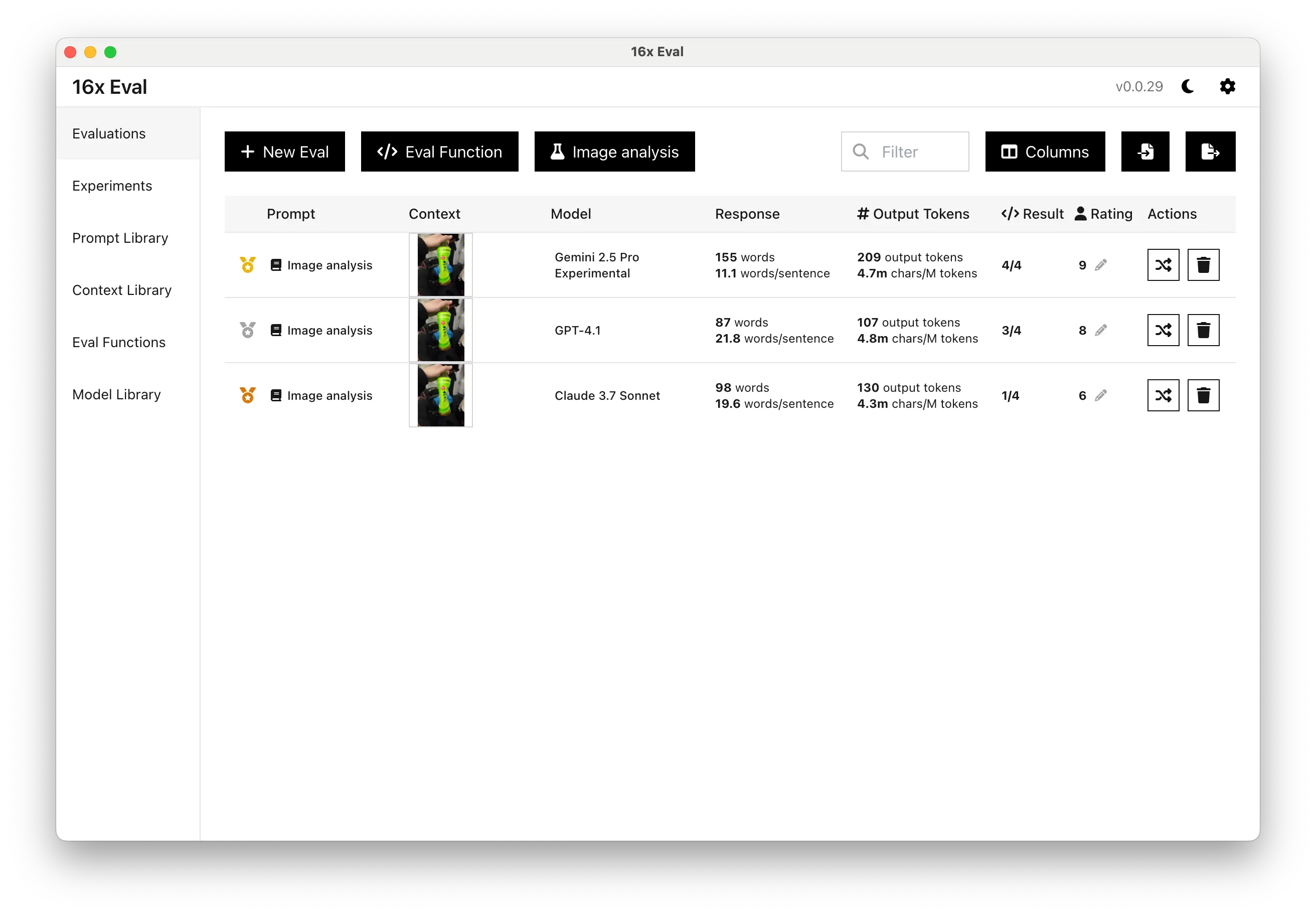

Image Analysis Task Evaluation


Image analysis experiment with prompt "Explain what happened in the image."

Evaluate AI models for image analysis tasks. Great for AI builders and researchers assessing how different AI models interpret and analyze visual content.

- Visual content analysis

- Multiple model comparison

- Custom evaluation criteria

- Response rating system